rdcu.be/cbBRc

Preview meta tags from the rdcu.be website.

Thumbnail

Search Engine Appearance

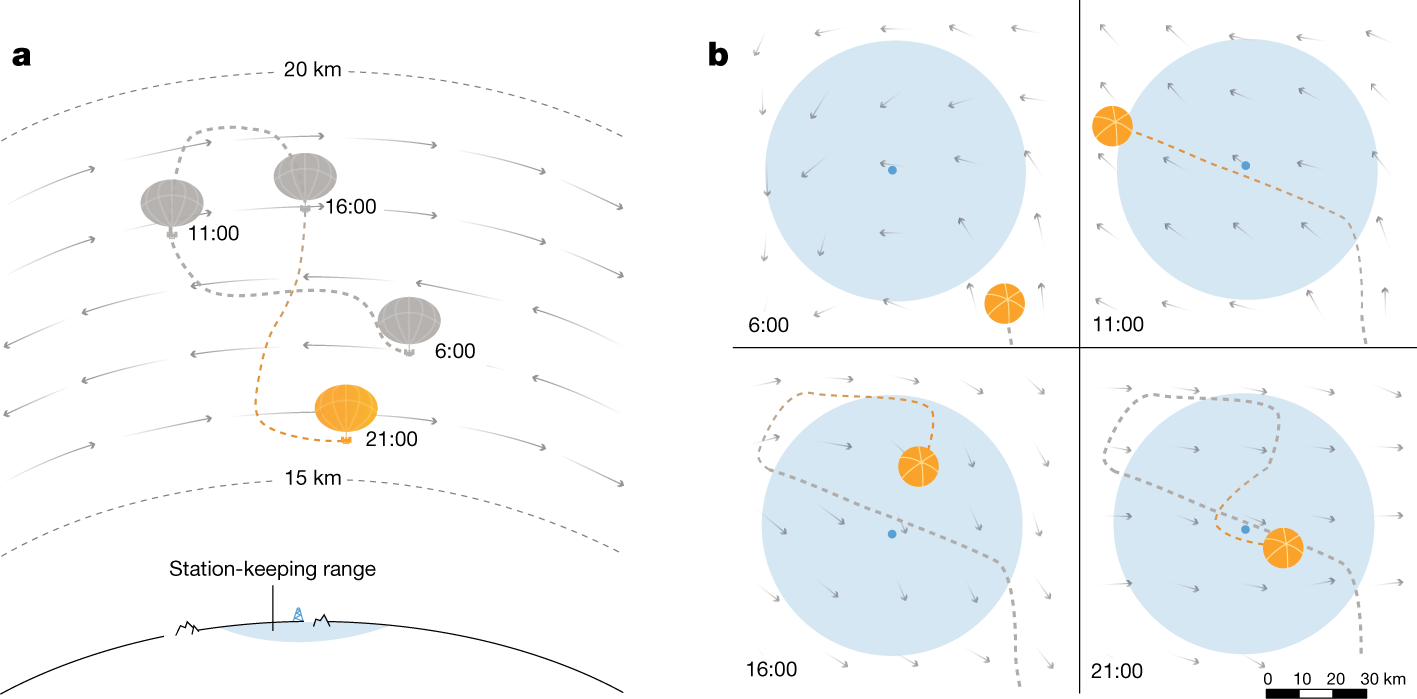

Autonomous navigation of stratospheric balloons using reinforcement learning

Efficiently navigating a superpressure balloon in the stratosphere [1] requires the integration of a multitude of cues, such as wind speed and solar elevation, and the process is complicated by forecast errors and sparse wind measurements. Coupled with the need to make decisions in real time, these factors rule out the use of conventional control techniques [2,3]. Here we describe the use of reinforcement learning [4,5] to create a high-performing flight controller. Our algorithm uses data augmentation [6,7] and a self-correcting design to overcome the key technical challenge of reinforcement learning from imperfect data, which has proved to be a major obstacle to its application to physical systems [8]. We deployed our controller to station Loon superpressure balloons at multiple locations across the globe, including a 39-day controlled experiment over the Pacific Ocean. Analyses show that the controller outperforms Loon’s previous algorithm and is robust to the natural diversity in stratospheric winds. These results demonstrate that reinforcement learning is an effective solution to real-world autonomous control problems in which neither conventional methods nor human intervention suffice, offering clues about what may be needed to create artificially intelligent agents that continuously interact with real, dynamic environments.

Data augmentation and a self-correcting design are used to develop a reinforcement-learning algorithm for the autonomous navigation of Loon superpressure balloons in challenging stratospheric weather conditions.

General Meta Tags (129)

- title: Autonomous navigation of stratospheric balloons using reinforcement learning | Nature

- journal_id: 41586

- dc.title: Autonomous navigation of stratospheric balloons using reinforcement learning

- dc.source: Nature 2020 588:7836

- dc.format: text/html

Twitter Meta Tags (6)

- twitter:site: @nature

- twitter:card: summary_large_image

- twitter:image:alt: Content cover image

- twitter:title: Autonomous navigation of stratospheric balloons using reinforcement learning

- twitter:description: Nature - Data augmentation and a self-correcting design are used to develop a reinforcement-learning algorithm for the autonomous navigation of Loon superpressure balloons in challenging...

Link Tags (1)

- canonical: /articles/s41586-020-2939-8