d2nerf.github.io

Preview meta tags from the d2nerf.github.io website.

Linked Hostnames

- 2 links to www.cl.cam.ac.uk

- 1 link to arxiv.org

- 1 link to chikayan.github.io

- 1 link to dorverbin.github.io

- 1 link to drive.google.com

- 1 link to github.com

- 1 link to people.csail.mit.edu

- 1 link to taiya.github.io

Thumbnail

Search Engine Appearance

D^2NeRF: Self-Supervised Decoupling of Dynamic and Static Objects from a Monocular Video

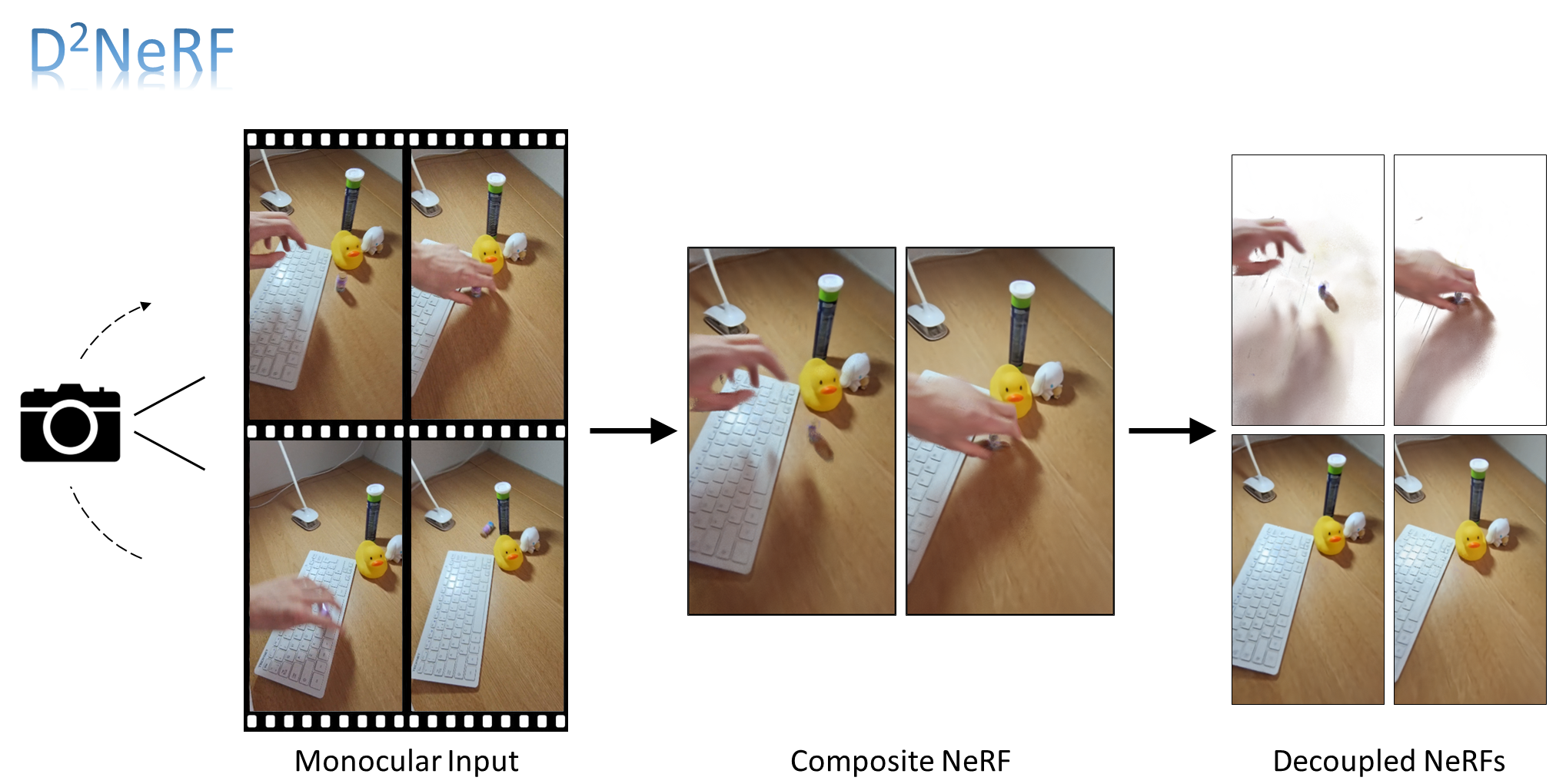

Given a monocular video, segmenting and decoupling dynamic objects while recovering the static environment is a widely studied problem in machine intelligence. Existing solutions usually approach this problem in the image domain, limiting their performance and understanding of the environment. We introduce Decoupled Dynamic Neural Radiance Field (D^2NeRF), a self-supervised approach that takes a monocular video and learns a 3D scene representation which decouples moving objects, including their shadows, from the static background. Our method represents the moving objects and the static background by two separate neural radiance fields with only one allowing for temporal changes. A naive implementation of this approach leads to the dynamic component taking over the static one as the representation of the former is inherently more general and prone to overfitting. To this end, we propose a novel loss to promote correct separation of phenomena. We further propose a shadow field network to detect and decouple dynamically moving shadows. We introduce a new dataset containing various dynamic objects and shadows and demonstrate that our method can achieve better performance than state-of-the-art approaches in decoupling dynamic and static 3D objects, occlusion and shadow removal, and image segmentation for moving objects.

Bing

Same title and description as the preview above.

DuckDuckGo

Same title and description as the preview above.

General Meta Tags

- title: D^2NeRF

- Content-Type: text/html; charset=UTF-8

- x-ua-compatible: ie=edge

- description

- viewport: width=device-width, initial-scale=1

Open Graph Meta Tags

- og:image: https://d2nerf.github.io/img/title_card.png

- og:image:type: image/png

- og:image:width: 1200

- og:image:height: 630

- og:type: website

Twitter Meta Tags

- twitter:card: summary_large_image

- twitter:title: D^2NeRF: Self-Supervised Decoupling of Dynamic and Static Objects from a Monocular Video

- twitter:description: Given a monocular video, segmenting and decoupling dynamic objects while recovering the static environment is a widely studied problem in machine intelligence. Existing solutions usually approach this problem in the image domain, limiting their performance and understanding of the environment. We introduce Decoupled Dynamic Neural Radiance Field (D^2NeRF), a self-supervised approach that takes a monocular video and learns a 3D scene representation which decouples moving objects, including their shadows, from the static background. Our method represents the moving objects and the static background by two separate neural radiance fields with only one allowing for temporal changes. A naive implementation of this approach leads to the dynamic component taking over the static one as the representation of the former is inherently more general and prone to overfitting. To this end, we propose a novel loss to promote correct separation of phenomena. We further propose a shadow field network to detect and decouple dynamically moving shadows. We introduce a new dataset containing various dynamic objects and shadows and demonstrate that our method can achieve better performance than state-of-the-art approaches in decoupling dynamic and static 3D objects, occlusion and shadow removal, and image segmentation for moving objects.

- twitter:image: https://d2nerf.github.io/img/title_card.png
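
For readers who want to reproduce a listing like the meta tag sections above, the following is a minimal sketch using only Python's standard-library `html.parser`. The `MetaTagCollector` class, the `collect_meta_tags` helper, and the `SAMPLE_HTML` string are hypothetical names introduced for illustration; the sample markup is assembled from a few of the values listed above and is an assumption about how such tags are typically written, not the site's actual source.

```python
from html.parser import HTMLParser


class MetaTagCollector(HTMLParser):
    """Collect <meta> tags into a dict keyed by property, name, or http-equiv."""

    def __init__(self):
        super().__init__()
        self.tags = {}

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        # Open Graph tags use "property"; most others use "name" or "http-equiv".
        key = attr.get("property") or attr.get("name") or attr.get("http-equiv")
        if key is not None:
            self.tags[key] = attr.get("content", "")


def collect_meta_tags(html):
    """Return a {key: content} dict for every keyed <meta> tag in the HTML string."""
    parser = MetaTagCollector()
    parser.feed(html)
    return parser.tags


# Hypothetical markup built from values in the listing; not the real page source.
SAMPLE_HTML = """
<head>
  <meta name="viewport" content="width=device-width, initial-scale=1">
  <meta property="og:image" content="https://d2nerf.github.io/img/title_card.png">
  <meta property="og:image:width" content="1200">
  <meta property="og:type" content="website">
  <meta name="twitter:card" content="summary_large_image">
</head>
"""

tags = collect_meta_tags(SAMPLE_HTML)
for key, value in sorted(tags.items()):
    print(f"{key}: {value}")
```

If a key appears more than once, this sketch keeps only the last value; a report that needs every occurrence would store a list per key instead.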

Link Tags

- icon: data:image/svg+xml,<svg xmlns=%22http://www.w3.org/2000/svg%22 viewBox=%220 0 100 100%22><text y=%22.9em%22 font-size=%2290%22>%E2%9C%A8</text></svg>

- stylesheet: css/bootstrap.min.css

- stylesheet: css/font-awesome.min.css

- stylesheet: css/codemirror.min.css

- stylesheet: css/app.css

Links

- https://arxiv.org/abs/2205.15838

- https://chikayan.github.io

- https://dorverbin.github.io/refnerf

- https://drive.google.com/drive/folders/1qm-8P6UqrhimZXp4USzFPumyfu8l1vto?usp=sharing

- https://github.com/d2nerf/d2nerf